UNFILTERED FOUNDERS!

Ok, it’s been a bit (again!), but I’ve been getting Unfiltered Founders going.

That’s my new interactive podcast on X/Twitter for founders - it’s in beta now, but will soon be released across a BUNCH of channels.

You can ask questions directly or anonymously, and get them answered LIVE.

All questions.

Any questions.

(No troll questions.)

All the embarrassing stuff. Not the “How do I deal with all the revenue I’m getting?” or “How do I deal with all of the competing investor offers?”

The real stuff. The “I hate my co-founder, what do I do?” “I slept with my investor, and I don’t know how to handle raising our next round,” “I can’t find customers.” “My churn rate is over 80% and rising.” “I don’t understand the terms of the fundraising document.”

Whatever you really need to know.

There’s a community to join where you can discuss the episodes, play games, and more.

Join the Unfiltered Founders Community - LINK HERE!

The next episode of Unfiltered Founders is on THURSDAY, DECEMBER 19 at 10 AM PACIFIC - LINK HERE. Ask questions, get answers. Play games.

The topic for this week: True vs reluctant founders - what they are and how you change what you do with revenue depending on which you are.

LLMs need to evolve - what this means for you

Now let’s get to the meat of this issue: The coming problem with LLMs in AI.

(Some of you might be expecting a tariff discussion - but that’s the next issue. This one has reached a peak a bit earlier than I expected, so you’re getting this one first.)

What’s the problem? Well, large language models are hitting a wall.

They have been scaling based on massive consumption of two big things: human-created data, and energy. Now they’re running out of data, and the ever-increasing costs are generating diminishing returns. Ilya Sutskever, co-founder of OpenAI and of Safe Superintelligence (SSI), addressed this topic in a November interview with Reuters, on which OpenAI declined to comment.

Costs are rising, and returns are dropping, soon to be dropping precipitously. Massive power shortages are making the entire problem worse. In fact, OpenAI is bleeding cash, and around early September (-ish) was set to post a $5 billion loss and face bankruptcy by the end of this year. It then announced a life-saving $6.6 billion in investment at a $157 billion valuation, and $4 billion in unsecured (??!) credit, even though this only lasts them another year.

If you thought blockchain was expensive in terms of energy, you should look at AI. OpenAI is - what’s the opposite of a cash cow? That 35 year old guy who won’t leave his parents’ basement, won’t look for a job, won’t consider going to college, and just drinks beer, eats his parents’ food and plays Call of Duty all day? That.

What does this mean?

Well, a few things.

First, the age of big LLMs is ending. What’s next? Look for models that actually do something with that data. We have to move to higher-end AI models. We’re at the end of what a bunch of generative search engines and simple training with bots can do.

Second, we’ll see a different batch of winning models. There will be a lot of small winners who are specialized in specific post-training applications. (Post-training is the phase after you feed the model its raw information, where you refine the model in a loop of further training for a specific field.) These are fine-tuned models, retrieval-augmented generation, cognitive AI, etc.

Third, we’ll see the growth of inference engines built on massive servers that will do the next phase of computation. While the last phase has been about raw input and learning, this new phase is about using that input and creating predictions.

You’ll also see more focus on something called “test-time compute” - the ability of a model to spend extra computation while it answers, considering multiple possibilities and outcomes simultaneously and looking for the best solution within computational constraints (something humans cannot do). When this pairs with quantum computing, incidentally, it will vastly outpace human thought and decision-making potential. Test-time compute will increase processing time (for now), but will result in better outcomes - provided the original data was correct. And that’s a BIG assumption, given some of the carelessness with which these models acquired their data.
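For the technically curious, here is a toy sketch of one simple form of test-time compute, often called best-of-N sampling: generate several candidate answers, score each one, and keep the best. Everything in it is a stand-in I made up for illustration. `generate_candidate` plays the role of one model sample and `score` plays the role of a verifier; no real model or vendor API is involved, and real systems use more elaborate search than this.

```python
import random


def generate_candidate(rng):
    """Hypothetical stand-in for one model sample: a random guess at x."""
    return rng.uniform(-10, 10)


def score(x):
    """Hypothetical stand-in for a verifier: higher is better, best at x = 3."""
    return -(x - 3) ** 2


def best_of_n(n, seed=0):
    """Spend more compute at answer time: draw n candidates, keep the top scorer."""
    rng = random.Random(seed)
    candidates = [generate_candidate(rng) for _ in range(n)]
    return max(candidates, key=score)


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        answer = best_of_n(n)
        print(f"n={n:>2}  answer={answer:.3f}  score={score(answer):.3f}")
```

The trade-off is the one described above: more candidates means more processing time per answer, in exchange for a better chance of landing on a good one. And the result is only as good as the scorer, which is the same "garbage in, garbage out" caveat as with the training data.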

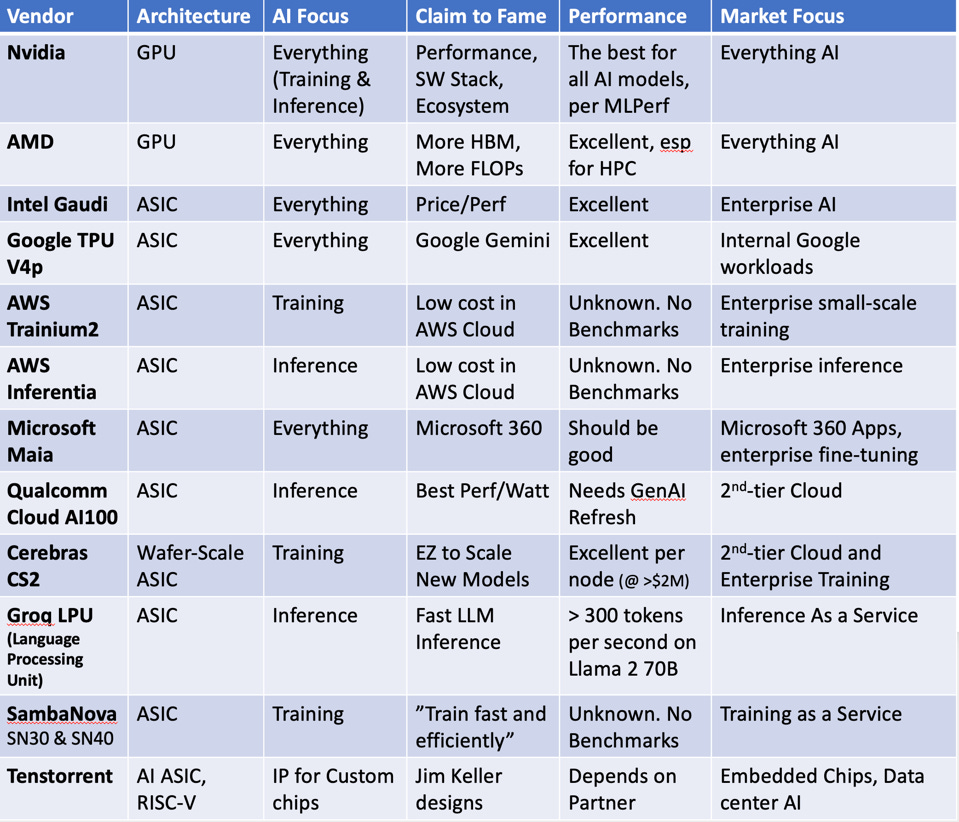

This means new chipmakers will take the lead. Despite Nvidia’s claims that the Blackwell chips will solve the inference cloud demand, a new rush of competition is coming, and it’s unlikely Nvidia is going to recover its lead. We can already see that Nvidia’s stock price is beginning to falter (e.g., “NVDA enters technical correction, down 15% from November high,” Yahoo Finance, December 17, 2024).
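If you want to check a “technical correction” headline yourself, the arithmetic is short. The sketch below assumes the common convention that a correction is a drop of 10% or more from a recent high. The function names and the prices are hypothetical placeholders, not actual NVDA quotes.

```python
def drawdown_pct(recent_high, current_price):
    """Percentage decline from the recent high to the current price."""
    return (recent_high - current_price) * 100 / recent_high


def is_correction(recent_high, current_price, threshold_pct=10.0):
    """True when the decline meets the assumed 10% correction threshold."""
    return drawdown_pct(recent_high, current_price) >= threshold_pct


if __name__ == "__main__":
    # Hypothetical prices chosen to mirror a 15% decline.
    high, now = 100.0, 85.0
    print(f"drawdown: {drawdown_pct(high, now):.1f}%")
    print(f"correction: {is_correction(high, now)}")
```

Plug in the real high and the current price from whatever data source you trust before drawing any conclusions.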

Here’s the positioning of competitors as of February 2024. Note the companies already in the inference chip and hardware space, ready to scale.

Credit: Forbes.com

Note that players like Broadcom and Marvell aren’t even included in this list, so you should look broadly at the market when investigating.

The current stock market is heavily weighted toward Nvidia and other LLM-focused companies. The market is due for a correction (and I think we’re starting to see it). The LLM-focused companies (whether product makers, their competitors or hardware suppliers) will soon start to fall hard, and a dip is usually a good time for them to start taking a turn.

Remember market drops are also opportunities - for undervalued buys and shorting. DO YOUR RESEARCH. If you don’t know how to do it, LEARN HOW FIRST.

Following my X spaces and this newsletter is a great start. They’re free, and will genuinely help.

I’ll be starting workshops this year to walk people through key concepts and how to apply them, from AI to real world asset tokenization to new forms of payment to understanding finance and building net worth. Keep an eye out for this.

Check out my upcoming spaces!

I’ve been asked many times to do a sponsored space, but had a REALLY hard time figuring out how to do one that worked with my ethics. I knew I needed:

To be able to ask any question I want, no restrictions

No marketing people from the company or project side

An actual reason for the space

No payment in equity or tokens

It took a LONG time to figure this out and get the right project for it, but we finally did it!

I’m hosting my first sponsored space on X/Twitter with AVIATOR on Thursday, December 26 at 12 pm Pacific - link HERE.

The purpose is to serve as a proxy for investors and users of the platform. I’m going to do a deep dive with the founders of Aviator, and ask the questions POTENTIAL INVESTORS AND USERS SHOULD BE ASKING.

I’m doing a lot of legwork. No questions out of bounds, no fluff. And I’m not paid in tokens or equity.

I think it should be extremely useful and interesting, so please join!

Unfiltered Founders is discussed at the top, and is recorded on X/Twitter on Thursdays at 10 am Pacific. The next episode link is HERE.

Please join - you’ll love it! It’s a very laid-back atmosphere, and you can submit anonymous questions to @UnfilterdFoundr on X/Twitter. That’s my show account; I am @alexdamsker.

And, as always, we have THE PITCH SPACE, every Sunday at 11 am Pacific - link HERE. Learn my QUICKPITCH method to develop relationships with potential investors and cofounders from your introduction.

Get great feedback from investors and exited founders. It’s fun, you’ll learn, and yes - people get funded. But the most important thing is: you and your company will get better just by being there and listening.

What’s bad about that? COME JOIN!